A warmup cache request is one of those technical mechanisms that rarely enters public conversation yet quietly shapes everyday digital experiences. In essence, it is a deliberate request made to a system before real users arrive, designed to populate caches with important data so that the first human interaction does not suffer from delays. In environments where milliseconds matter (web applications, APIs, content delivery networks, and large-scale cloud platforms), this preparation can be the difference between perceived reliability and immediate frustration.

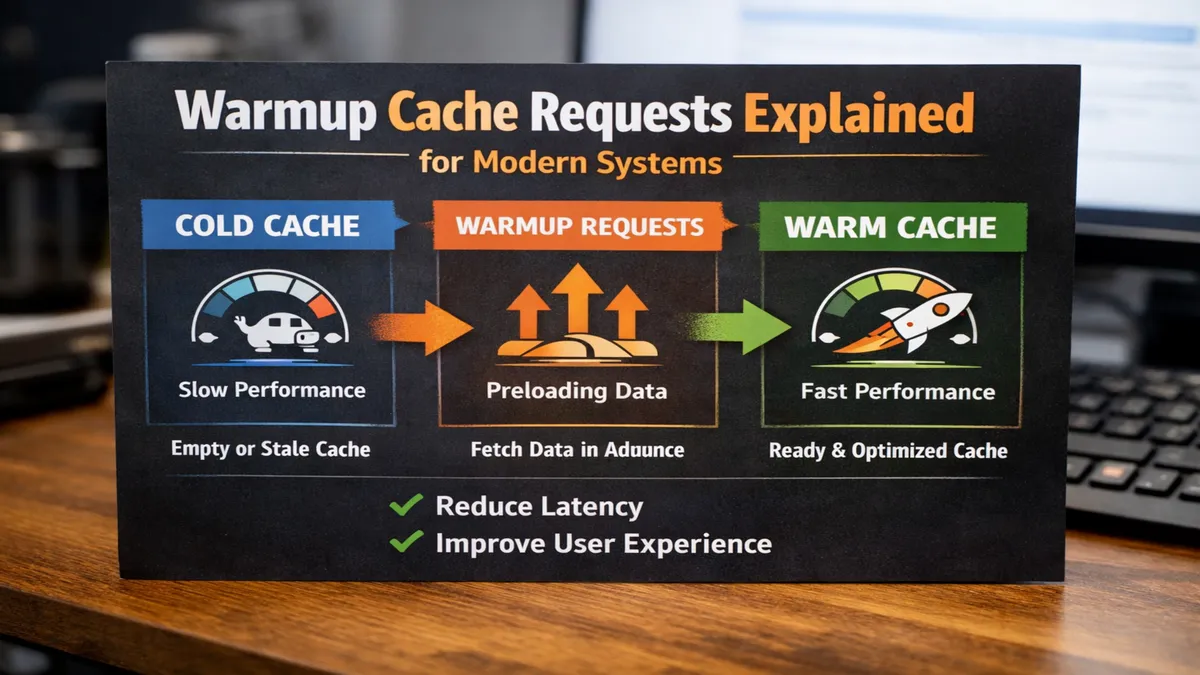

When a system starts fresh or a cache expires, it enters what engineers call a cold cache state. In this state, every request must reach deeper layers of infrastructure, such as databases or origin servers, resulting in higher latency and heavier load. A warmup cache request moves the system into a warm cache state ahead of time by triggering the same retrieval paths that real users would invoke. As a result, the cache is already populated when genuine traffic begins.

In the first moments after a deployment, restart, or cache purge, user experience is most vulnerable. Warmup cache requests address this fragile window by smoothing the transition from empty memory to optimal performance. They are not shortcuts or hacks; rather, they are intentional, disciplined techniques grounded in system design principles that prioritize predictability and resilience. Understanding how they work, when they are necessary, and how they can fail is essential for anyone building or managing performance-sensitive digital systems.

Understanding the Concept of Cache Warming

Cache warming refers to the broader practice of intentionally loading data into a cache before it is requested organically. A warmup cache request is the operational action that makes this practice concrete. Instead of waiting for unpredictable user behavior to determine what gets cached, engineers proactively decide which resources are most critical and request them in advance.

The importance of this approach becomes clearer when contrasted with passive caching. In passive caching, content is cached only after users request it. The first user always pays the performance penalty. Cache warming shifts that cost away from real users and into controlled, automated processes that can be scheduled, throttled, and monitored.

In distributed systems, the concept grows more complex. Each cache layer—browser cache, application cache, CDN edge cache, database cache—may require its own warmup strategy. A single warmup cache request might populate one layer while leaving others untouched. Effective warming, therefore, requires a clear understanding of cache boundaries and responsibilities.

Cold Cache Versus Warm Cache Behavior

The distinction between cold and warm cache behavior is foundational to understanding why warmup cache requests exist at all. A cold cache is empty or minimally populated. Requests during this phase tend to be slower, more expensive, and less predictable. Backend services must perform full computations, database queries, or origin fetches.

A warm cache, by contrast, already holds frequently accessed data. Requests are served directly from memory or edge storage, drastically reducing response time and backend workload. Warmup cache requests are the bridge between these two states, deliberately transforming cold caches into warm ones before users encounter them.

This difference is not merely technical; it has direct business implications. Slow initial responses can skew performance metrics, increase bounce rates, and distort load-testing results. By ensuring that caches are warm from the outset, organizations gain a more accurate picture of how systems behave under normal conditions.

How Warmup Cache Requests Work

At a technical level, a warmup cache request looks like any other request. It follows the same protocols, headers, and authentication rules. The difference lies in intent and timing. These requests are generated by scripts, scheduled jobs, deployment pipelines, or monitoring systems rather than by human users.

When such a request reaches the system, the cache layer evaluates whether the requested resource is already present. If it is not, the system fetches the data from its source, processes it if necessary, and stores the result in the cache according to predefined policies. Subsequent requests—whether warmup-driven or user-driven—benefit from that cached response.
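
The check-fetch-store sequence described above can be sketched in a few lines. This is a minimal illustration, not a production cache: the `ReadThroughCache` class, the `warm` helper, and the `render_page` stand-in for the data source are all hypothetical names introduced here, and the 300-second TTL is an arbitrary assumption.

```python
import time
from typing import Callable, Dict, Iterable, List, Tuple


class ReadThroughCache:
    """Minimal in-memory read-through cache with a fixed time-to-live."""

    def __init__(self, fetch_from_source: Callable[[str], str], ttl_seconds: float = 300.0):
        self._fetch = fetch_from_source
        self._ttl = ttl_seconds
        self._store: Dict[str, Tuple[float, str]] = {}  # key -> (expires_at, value)
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> str:
        now = time.monotonic()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            self.hits += 1  # already cached and still fresh
            return entry[1]
        # Missing or expired: fetch from the source, then store the result.
        self.misses += 1
        value = self._fetch(key)
        self._store[key] = (now + self._ttl, value)
        return value


def warm(cache: ReadThroughCache, keys: Iterable[str]) -> None:
    """Send ordinary reads so the cache fills through its normal path."""
    for key in keys:
        cache.get(key)


source_calls: List[str] = []


def render_page(key: str) -> str:
    """Hypothetical stand-in for a database query or origin fetch."""
    source_calls.append(key)
    return f"rendered:{key}"


cache = ReadThroughCache(render_page)
warm(cache, ["/home", "/pricing"])        # two misses, paid by the warmup job
first_user_response = cache.get("/home")  # served from the cache
```

The warmup goes through the same `get` path a user request would take, so the first real request for `/home` is a hit and the source is contacted only twice in total.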

Because caches are often geographically distributed, especially in content delivery networks, warmup requests may need to be region-aware. A request routed through one geographic endpoint may warm only that specific cache node. Comprehensive warmup strategies therefore simulate access from multiple regions or leverage platform features that orchestrate warming across the network.
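
One way to picture region-aware warming is a loop over per-region entry points. The sketch below assumes each region can be reached through its own hostname; the `REGION_ENDPOINTS` values are made-up placeholders, and many platforms expose regions differently or provide their own warming features instead. The fetch function is injectable so the loop can be tried without network access.

```python
import urllib.request
from typing import Callable, Dict, Iterable, Tuple

# Hypothetical per-region hostnames; replace with whatever your platform offers.
REGION_ENDPOINTS: Dict[str, str] = {
    "us-east": "https://us-east.edge.example.com",
    "eu-west": "https://eu-west.edge.example.com",
    "ap-south": "https://ap-south.edge.example.com",
}


def http_get_status(url: str) -> int:
    """Perform a real GET and return the HTTP status code."""
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.status


def warm_regions(
    endpoints: Dict[str, str],
    paths: Iterable[str],
    fetch: Callable[[str], int] = http_get_status,
) -> Dict[Tuple[str, str], object]:
    """Request every path through every regional endpoint.

    Returns a status code, or the exception raised, per (region, path).
    """
    path_list = list(paths)
    results: Dict[Tuple[str, str], object] = {}
    for region, base_url in endpoints.items():
        for path in path_list:
            try:
                results[(region, path)] = fetch(base_url + path)
            except OSError as exc:  # URLError and timeouts are OSError subclasses
                results[(region, path)] = exc
    return results


# Dry run with a stub so the sketch can be exercised offline.
requested_urls = []


def fake_fetch(url: str) -> int:
    requested_urls.append(url)
    return 200


report = warm_regions(REGION_ENDPOINTS, ["/", "/pricing"], fetch=fake_fetch)
```

Recording a result per region and path, rather than stopping at the first failure, makes it easier to see which nodes are still cold afterwards.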

Common Techniques for Cache Warmup

There is no single universal method for warming caches. Instead, teams choose techniques based on scale, complexity, and traffic patterns.

Manual preloading is the simplest approach. Developers or operators trigger requests to key pages or endpoints by hand. This method is practical for small systems but quickly becomes unmanageable at scale.

Automated warmers are more common in production environments. These tools generate requests based on predefined lists, sitemaps, or configuration files. They can be integrated into deployment workflows so that caches are warmed immediately after a new release.
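
As a rough sketch of a sitemap-driven warmer, the snippet below extracts page URLs from a sitemap document and requests each one. It assumes the standard sitemaps.org XML namespace; `urls_from_sitemap`, `warm_urls`, and the sample document are illustrative names and data, and the fetch function is a stub standing in for a real HTTP client.

```python
import xml.etree.ElementTree as ET
from typing import Callable, Dict, Iterable, List

# Namespace used by standard sitemap files.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(xml_text: str) -> List[str]:
    """Return the <loc> value of every <url> entry in a sitemap document."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def warm_urls(urls: Iterable[str], fetch: Callable[[str], int]) -> Dict[str, int]:
    """Request each URL once and record the status code that came back."""
    return {url: fetch(url) for url in urls}


SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/pricing</loc></url>
</urlset>"""

urls = urls_from_sitemap(SAMPLE_SITEMAP)
statuses = warm_urls(urls, fetch=lambda url: 200)  # stub instead of a real request
```

In a deployment workflow, a script along these lines would typically run as a post-release step, with the stub replaced by a real request function.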

Predictive warming takes the concept further by analyzing historical traffic patterns. Instead of warming everything, the system warms only the most frequently accessed or business-critical resources. This approach balances performance benefits with resource efficiency.
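
A simple form of this idea is to count successful requests per path in a past access log and warm only the top few. The sketch below assumes log lines in the common `"GET /path HTTP/1.1" 200` shape; the regular expression, the `top_paths` function, and the sample lines are illustrative assumptions, and real traffic analysis is usually more involved.

```python
import re
from collections import Counter
from typing import Iterable, List

# Matches the request and status portion of a common-log-format line.
REQUEST_RE = re.compile(r'"GET (\S+) HTTP/[\d.]+" (\d{3})')


def top_paths(log_lines: Iterable[str], limit: int = 10) -> List[str]:
    """Return the most frequently requested paths that answered with 200."""
    counts: Counter = Counter()
    for line in log_lines:
        match = REQUEST_RE.search(line)
        if match and match.group(2) == "200":
            counts[match.group(1)] += 1
    return [path for path, _ in counts.most_common(limit)]


SAMPLE_LOG = [
    '10.0.0.1 - - [10/Oct/2023:13:55:36 +0000] "GET /home HTTP/1.1" 200 2326',
    '10.0.0.2 - - [10/Oct/2023:13:55:37 +0000] "GET /home HTTP/1.1" 200 2326',
    '10.0.0.3 - - [10/Oct/2023:13:55:38 +0000] "GET /pricing HTTP/1.1" 200 1100',
    '10.0.0.4 - - [10/Oct/2023:13:55:39 +0000] "GET /home HTTP/1.1" 200 2326',
    '10.0.0.5 - - [10/Oct/2023:13:55:40 +0000] "GET /pricing HTTP/1.1" 200 1100',
    '10.0.0.6 - - [10/Oct/2023:13:55:41 +0000] "GET /about HTTP/1.1" 200 900',
    '10.0.0.7 - - [10/Oct/2023:13:55:42 +0000] "GET /missing HTTP/1.1" 404 0',
]

warm_list = top_paths(SAMPLE_LOG, limit=2)
```

Here `warm_list` contains `/home` and `/pricing`, so the rarely visited `/about` page and the failing `/missing` path are left out of the warmup run.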

Event-driven warmup strategies trigger cache warming in response to specific events, such as a cache purge, system restart, or anticipated traffic spike. This ensures that warmup occurs precisely when it is needed, rather than on a fixed schedule.
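
The event-driven pattern can be sketched with a tiny in-process publish/subscribe mechanism. Everything here is hypothetical: the `EventBus` class, the `cache.purged` event name, and the payload shape are assumptions for illustration, and a real system would more likely hook into a message queue, webhook, or deployment tool.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, Dict, List

Handler = Callable[[Dict[str, Any]], None]


class EventBus:
    """Minimal in-process publish/subscribe registry."""

    def __init__(self) -> None:
        self._handlers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_name: str, handler: Handler) -> None:
        self._handlers[event_name].append(handler)

    def publish(self, event_name: str, payload: Dict[str, Any]) -> None:
        # Events with no subscribers are silently ignored.
        for handler in self._handlers.get(event_name, []):
            handler(payload)


warmed_paths: List[str] = []


def warm_after_purge(payload: Dict[str, Any]) -> None:
    """Re-request every purged path; appending stands in for a real request."""
    for path in payload.get("paths", []):
        warmed_paths.append(path)


bus = EventBus()
bus.subscribe("cache.purged", warm_after_purge)

bus.publish("cache.purged", {"paths": ["/home", "/pricing"]})  # triggers warmup
bus.publish("user.login", {"user": "alice"})                   # unrelated, ignored
```

Only the purge event triggers warming, which keeps warmup traffic tied to the moments when the cache is actually known to be cold.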

Benefits of Warmup Cache Requests

The most immediate benefit of warmup cache requests is reduced latency. Users experience faster response times from their very first interaction, rather than only after caches naturally fill.

Another significant advantage is reduced backend load. By serving requests from cache instead of origin systems, warmup reduces database queries, computation overhead, and network traffic. This can be particularly valuable during high-traffic events or immediately after deployments.

Warmup cache requests also contribute to performance stability. Instead of unpredictable spikes in response time during cold starts, systems exhibit smoother, more consistent behavior. This stability improves observability, making metrics and alerts more meaningful.

Finally, proactive cache warming can improve the accuracy of load tests and monitoring. Tests run against cold caches often produce pessimistic results that do not reflect real-world performance. Warmed caches provide a more realistic baseline.

Expert Perspectives on Proactive Caching

System architects often emphasize that cache warming is not about maximizing cache size but about maximizing relevance. Warming the wrong content wastes resources and can even evict more valuable data.

Performance engineers note that warmup cache requests are especially critical in distributed environments, where latency is influenced by geography as much as computation. Proactively warming edge caches helps deliver consistent experiences across regions.

Operational teams highlight the importance of automation. Manual warmup processes are error-prone and difficult to scale. Automated, monitored warmup workflows ensure repeatability and reduce human intervention during critical moments like deployments.

Challenges and Risks

Despite its advantages, cache warming introduces its own set of challenges. One of the most common risks is excessive resource consumption. Warmup requests generate traffic, consume bandwidth, and place load on origin systems. If poorly controlled, they can negate the very benefits they aim to provide.
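
One common way to keep warmup traffic under control is to cap its request rate. The sketch below spaces requests so that no more than a chosen number start per second; `throttled_warm` and the `FakeTime` helper are illustrative names, and the limit of four requests per second is an arbitrary assumption. The clock and sleep functions are injectable so the pacing can be checked without waiting.

```python
import time
from typing import Callable, Iterable, List, Optional


def throttled_warm(
    urls: Iterable[str],
    fetch: Callable[[str], object],
    max_per_second: float = 5.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> int:
    """Fetch each URL, leaving at least 1/max_per_second between request starts."""
    min_interval = 1.0 / max_per_second
    last_started: Optional[float] = None
    completed = 0
    for url in urls:
        if last_started is not None:
            wait = min_interval - (clock() - last_started)
            if wait > 0:
                sleep(wait)
        last_started = clock()
        fetch(url)
        completed += 1
    return completed


class FakeTime:
    """Deterministic clock for the demo: sleeping simply advances the time."""

    def __init__(self) -> None:
        self.now = 0.0
        self.slept: List[float] = []

    def clock(self) -> float:
        return self.now

    def sleep(self, seconds: float) -> None:
        self.slept.append(seconds)
        self.now += seconds


fake = FakeTime()
fetched: List[str] = []
count = throttled_warm(
    ["/a", "/b", "/c"],
    fetch=fetched.append,
    max_per_second=4.0,
    sleep=fake.sleep,
    clock=fake.clock,
)
```

With a limit of four per second, the three requests are separated by two pauses of 0.25 seconds each. A stricter setup might also cap concurrency or back off when the origin starts responding slowly.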

Another challenge lies in cache expiration and invalidation. Cached data has a limited lifespan. If warmup runs are not aligned with expiration policies, warmed entries may expire before real traffic arrives, or the cache may keep serving data that has since changed at the source, leading to inconsistencies.
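
One way to line warmup up with expiration is to re-warm an entry once it has used up most of its lifetime. The sketch below is an assumption-laden illustration: the `CachedEntry` record, the `due_for_rewarm` function, and the 80 percent refresh threshold are all hypothetical choices, not a rule from any particular cache.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class CachedEntry:
    key: str
    stored_at: float    # seconds, on the same clock as `now`
    ttl_seconds: float  # lifetime assigned by the cache policy


def due_for_rewarm(
    entries: Iterable[CachedEntry],
    now: float,
    refresh_fraction: float = 0.8,
) -> List[str]:
    """Return keys whose age has reached refresh_fraction of their TTL.

    Entries that have already expired are included as well.
    """
    due = []
    for entry in entries:
        age = now - entry.stored_at
        if age >= entry.ttl_seconds * refresh_fraction:
            due.append(entry.key)
    return due


entries = [
    CachedEntry("/home", stored_at=0.0, ttl_seconds=300.0),       # age 250 of 300
    CachedEntry("/pricing", stored_at=200.0, ttl_seconds=300.0),  # age 50 of 300
    CachedEntry("/feed", stored_at=0.0, ttl_seconds=60.0),        # already expired
]

to_rewarm = due_for_rewarm(entries, now=250.0)
```

In this run `/home` and `/feed` are selected while `/pricing` is left alone, so the warmup job spends requests only where the cached copy is close to or past its expiry.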

Over-warming is another subtle risk. Attempting to warm every possible resource can overwhelm caches and reduce hit ratios for truly important content. Effective warmup requires careful prioritization.
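
The eviction effect is easy to reproduce with a small least-recently-used cache. The `LRUCache` class below is a toy written for this illustration, with an arbitrary capacity of three entries; real caches use a variety of eviction policies, so treat this as one possible scenario rather than a general result.

```python
from collections import OrderedDict
from typing import List, Optional


class LRUCache:
    """Toy least-recently-used cache with a fixed number of slots."""

    def __init__(self, capacity: int) -> None:
        self._capacity = capacity
        self._data: "OrderedDict[str, str]" = OrderedDict()

    def __contains__(self, key: str) -> bool:
        return key in self._data

    def get(self, key: str) -> Optional[str]:
        if key not in self._data:
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return self._data[key]

    def put(self, key: str, value: str) -> None:
        self._data[key] = value
        self._data.move_to_end(key)
        if len(self._data) > self._capacity:
            self._data.popitem(last=False)  # evict the least recently used entry

    def keys(self) -> List[str]:
        return list(self._data)


hot_pages = ["/home", "/pricing", "/checkout"]
cache = LRUCache(capacity=3)

# Targeted warmup: the three pages that matter most fit exactly.
for page in hot_pages:
    cache.put(page, "hot")

# Over-warming: a long tail of rarely used pages goes through the same cache.
for index in range(5):
    cache.put(f"/archive/{index}", "cold")

hot_survivors = [page for page in hot_pages if page in cache]
```

After the second loop none of the hot pages remain in the cache, which now holds only the last three archive pages, so the next real request for `/home` is a miss despite the warmup.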

Finally, distributed systems complicate visibility. It can be difficult to verify whether caches are truly warm across all nodes, making monitoring and observability essential components of any warmup strategy.
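
A lightweight way to check warmth is to inspect cache-status response headers collected from each node. Header names vary by provider; the three checked here (the standardized `Cache-Status` field, plus `X-Cache` and `CF-Cache-Status`, which some CDNs emit) are assumptions about what your platform returns, and the node names and header values are invented sample data.

```python
from typing import Dict, List, Mapping

# Header names to inspect, in priority order. Adjust for your platform.
STATUS_HEADERS = ("cache-status", "x-cache", "cf-cache-status")


def cache_status(headers: Mapping[str, str]) -> str:
    """Classify a response as 'hit', 'miss', or 'unknown' from its headers.

    This is a coarse substring check. A Cache-Status value that lists several
    caches would need proper parsing to tell which layer produced the hit.
    """
    normalized = {name.lower(): value.lower() for name, value in headers.items()}
    for name in STATUS_HEADERS:
        value = normalized.get(name)
        if value is None:
            continue
        if "hit" in value:
            return "hit"
        if "miss" in value:
            return "miss"
    return "unknown"


# Invented sample: response headers gathered from four hypothetical edge nodes.
node_headers: Dict[str, Dict[str, str]] = {
    "edge-a": {"X-Cache": "Hit from cloudfront"},
    "edge-b": {"CF-Cache-Status": "MISS"},
    "edge-c": {"Cache-Status": "ExampleCache; hit"},
    "edge-d": {"Content-Type": "text/html"},
}

report = {node: cache_status(headers) for node, headers in node_headers.items()}
cold_nodes: List[str] = [node for node, status in report.items() if status != "hit"]
```

Treating `unknown` as not warm is a deliberately cautious choice here: a node that reports nothing is flagged for follow-up rather than assumed to be healthy.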

Practical Use Cases

Warmup cache requests are commonly used after deployments, when application servers restart and in-memory caches are cleared. By warming caches immediately, teams avoid exposing users to degraded performance during the post-deployment window.
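
One possible way to protect the post-deployment window is to keep a freshly started instance out of rotation until its in-memory cache has been filled. The sketch below assumes a load balancer that polls a health endpoint and only routes traffic on a 200 response; the `AppInstance` class, its method names, and the key names are hypothetical.

```python
from typing import Callable, Dict, Iterable, List


class AppInstance:
    """Application server that reports healthy only after its cache is warm."""

    def __init__(self, load_value: Callable[[str], str], critical_keys: Iterable[str]) -> None:
        self._load = load_value
        self._critical_keys = list(critical_keys)
        self._cache: Dict[str, str] = {}
        self.ready = False

    def warm_up(self) -> None:
        """Load every critical key, then mark the instance as ready."""
        for key in self._critical_keys:
            self._cache[key] = self._load(key)
        self.ready = True

    def health_status(self) -> int:
        """Status code a health check would see: 503 until warmup completes."""
        return 200 if self.ready else 503

    def handle(self, key: str) -> str:
        """Serve from the cache, falling back to the source on a miss."""
        if key not in self._cache:
            self._cache[key] = self._load(key)
        return self._cache[key]


database_loads: List[str] = []


def load_from_database(key: str) -> str:
    """Hypothetical stand-in for an expensive query."""
    database_loads.append(key)
    return f"value-for-{key}"


instance = AppInstance(load_from_database, ["config", "top-products"])
status_before = instance.health_status()  # 503: not yet in rotation
instance.warm_up()
status_after = instance.health_status()   # 200: safe to receive traffic
response = instance.handle("top-products")
```

Because the instance only reports healthy after `warm_up` finishes, the first user request for `top-products` is answered from memory without another trip to the database.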

They are also valuable before marketing campaigns or product launches, where sudden traffic spikes are expected. Pre-warming ensures that infrastructure is ready before demand arrives.

In API-driven systems, warmup cache requests help stabilize response times for frequently called endpoints, especially those involving complex calculations or database joins.

Takeaways

- Warmup cache requests proactively populate caches before real users arrive

- They reduce latency, backend load, and performance variability

- Effective warmup requires prioritization of high-value resources

- Automation and monitoring are critical for scalable implementations

- Poorly designed warmup strategies can waste resources or introduce risk

Conclusion

Warmup cache requests occupy a quiet but powerful position in modern system design. They do not change what a system does, but they profoundly influence how well it does it under real-world conditions. By shifting the cost of cache misses away from users and into controlled, automated processes, warmup strategies help systems deliver speed, stability, and predictability from the very first request.

As digital infrastructure continues to scale and spread across regions, the importance of proactive caching is likely to grow. Warmup cache requests are not reserved for elite systems; wherever cold-start latency is costly enough to justify the overhead, they are becoming a standard practice for platforms that value performance and user trust. When applied thoughtfully, they transform caching from a passive convenience into an active, strategic asset.

FAQs

What is a warmup cache request?

It is a deliberate request sent to a system before real users arrive to populate caches and avoid cold-start performance penalties.

When should cache warming be used?

Cache warming is most useful after deployments, restarts, cache purges, or before anticipated traffic spikes.

Is cache warming always beneficial?

Not always. Small systems or low-traffic applications may not justify the overhead of warming caches.

Can warmup requests cause problems?

Yes. Poorly designed warmup processes can waste resources, overload systems, or cache stale data.

How are warmup cache requests automated?

They are typically automated using scripts, deployment pipelines, or monitoring tools that trigger requests based on events or schedules.
